Our Journey of Building a Gateway Service

Introduction

At Quizizz, our mission is to empower educators to engage learners and improve their learning outcomes. A core part of our platform is the ability for teachers to run live, gamified quizzes in their classrooms. Because these activities happen live, every student needs a smooth, frictionless experience with no disruptions.

One of the most pressing problems we have faced is DoS (Denial of Service) attacks. In this blog post, I'll talk about what we did to mitigate their consequences and what we learned from the effort.

Our current system

As we have grown and gained new users, these attacks have become more frequent. In our early days, we relied on quick, cost-efficient solutions to manage them: AWS WAF (Web Application Firewall) and custom application-level rate limiters in each service.

As the attacks grew more frequent and sophisticated, and our infrastructure grew more complex, we needed a permanent solution. Our existing defenses still let DoS attacks through, mostly because WAF's 5-minute window is too long to detect an attack before it propagates to our services. WAF has also become prohibitively expensive as our traffic grows.

Problems

Failure to block all DoS attacks

We have been facing DoS attacks for some time now, and AWS WAF, AWS's managed web application firewall, wasn't fully effective at stopping them. Quite a few attacks leaked into our system, triggering service scale-ups and high CPU usage on individual containers. The effects cascaded across the entire system, generally resulting in instability for users.

Application-level rate limiters and quick fixes proved ineffective, since attackers would change strategy and target different endpoints or services. We needed a single, effective service in front of all our services that could handle DoS attacks by rate limiting on multiple attributes, such as login state, IP address, and HTTP method, path, and headers.
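To make "rate limiting on multiple attributes" concrete, here is a minimal sketch of how a gateway might combine request attributes into a single counter key. The `Request` type, the `rate_limit_key` function, and the key format are hypothetical illustrations, not our actual implementation; headers or other attributes could be folded in the same way.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Request:
    """Hypothetical, minimal view of an incoming request."""
    ip: str
    method: str
    path: str
    user_id: Optional[str] = None  # set only for authenticated requests


def rate_limit_key(req: Request) -> str:
    """Build a composite rate-limit key from several request attributes."""
    # Logged-in traffic is counted per user; anonymous traffic per client IP.
    identity = f"user:{req.user_id}" if req.user_id else f"ip:{req.ip}"
    # Method and path give each endpoint its own counter.
    return f"rl:{identity}:{req.method.upper()}:{req.path}"
```

With this scheme, an anonymous `GET /api/quiz` from `1.2.3.4` is counted under `rl:ip:1.2.3.4:GET:/api/quiz`, so an attacker hammering one endpoint exhausts only that counter.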

Duplication of logic in multiple services

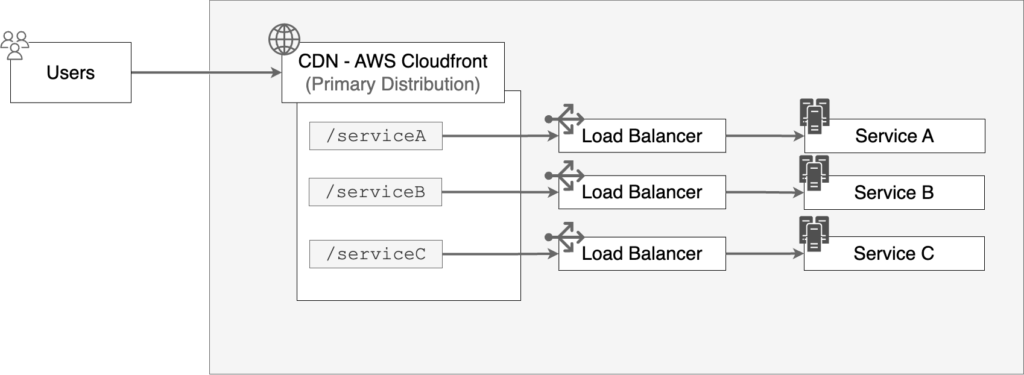

In our current infrastructure, the CDN routes traffic to several different load balancers sitting behind it.

While this is a simple way to build our platform, it has a major downside: we have little to no control over how requests are routed, and there is no single place for business logic that is common to all services, such as authentication.

As a result, individual microservices have, over time, implemented their own versions of the same business logic. Any change to these common layers must be repeated in every service, which over time causes bugs and increases tech debt. These bugs can have severe consequences, such as security vulnerabilities.

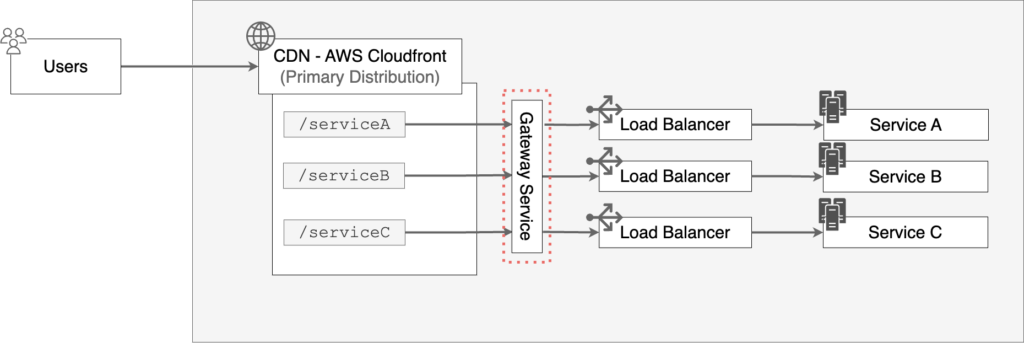

The solution is a common entry point to the system, the gateway service, which acts as middleware for all our requests.
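As a rough illustration of the "common entry point" idea, the sketch below chains shared middleware (here, a placeholder authentication check) in front of a prefix-based router, so the shared logic runs once at the gateway rather than in every service. All names, the dict-based request model, and the token check are hypothetical; a real gateway such as Kong expresses this with plugins and route configuration instead.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical types: a request is a plain dict, a response is (status, body).
Response = Tuple[int, str]
Handler = Callable[[dict], Response]
Middleware = Callable[[dict, Handler], Response]


def authenticate(req: dict, next_handler: Handler) -> Response:
    """Shared auth check that runs once at the gateway (placeholder logic)."""
    if req["path"].startswith("/api/") and "token" not in req:
        return 401, "unauthenticated"
    return next_handler(req)


def _wrap(middleware: Middleware, next_handler: Handler) -> Handler:
    return lambda req: middleware(req, next_handler)


def build_gateway(middlewares: List[Middleware], routes: Dict[str, Handler]) -> Handler:
    """Chain middlewares in front of a prefix-based router."""

    def route(req: dict) -> Response:
        # Longest matching path prefix wins.
        for prefix in sorted(routes, key=len, reverse=True):
            if req["path"].startswith(prefix):
                return routes[prefix](req)
        return 404, "no route"

    handler: Handler = route
    # Wrap in reverse so the first middleware in the list runs first.
    for middleware in reversed(middlewares):
        handler = _wrap(middleware, handler)
    return handler
```

With routes `{"/api/quiz": quiz_service, "/": web_app}`, an unauthenticated request to `/api/quiz/1` is rejected at the gateway before any backend sees it, and changing the auth rule means changing one function instead of every service.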

Heavy reliance on AWS: limited customizability and ballooning costs

We use AWS Application Load Balancers to power a number of platform features that improve the developer experience, such as letting developers test and experiment with their code without releasing it to real users. This strategy has worked so far, but as we continue to grow quickly, we have been running into AWS service quota limits and rising costs.

We realized that to keep growing, keep developers moving fast, and ensure Quizizz remains a reliable, highly available, and cost-efficient platform, we'd need to move parts of our platform away from AWS services towards cloud-native solutions.

We decided to build a custom gateway service with our own rate limiter implementation, deployed on Kubernetes using a cloud-native tech stack.

Gateways and Kong: what are they?

An application gateway is a networking component that acts as an intermediary between users and web applications. It provides secure and scalable access to web applications by offering functionalities such as load balancing, SSL/TLS termination, and web application firewall (WAF) capabilities. It serves as a central entry point, routing incoming requests to the appropriate backend servers, and can also provide features like session affinity and URL-based routing. Overall, an application gateway helps improve performance, reliability, and security for web applications by managing traffic and enforcing policies at the application layer.

Kong Gateway is an open-source, cloud-native API gateway that acts as a central point of control for managing and securing API traffic. It enables organizations to efficiently manage their APIs by providing capabilities such as authentication, rate limiting, request/response transformations, and traffic control. Kong Gateway acts as a middleware layer between clients and backend services, ensuring reliable and secure communication. With its extensive plugin ecosystem, it offers flexibility and extensibility to customize and enhance API functionality. By simplifying API management tasks, Kong Gateway lets developers focus on building robust and scalable applications while ensuring high performance and security for their APIs. There is a lot more to say about Kong than this summary covers; if you're interested, this is a great start.

Kong needs a persistent data store for its rate-limit counters. There are many options; we used Redis, one of the most popular.

Why we built our own rate limiter

Kong provides a default rate limiter, but it didn't fully cover our use case. Several features we wanted required a custom implementation:

Redis Cluster Support

Quizizz peaks at roughly 750k requests per minute (RPM), and DoS attacks can reach tens of millions of RPM. We therefore couldn't rely on a single Redis node for high availability and performance; we wanted a large Redis cluster so that Redis wouldn't crash during a DoS attack.
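For context on how a Redis cluster spreads rate-limit counters: per the Redis Cluster specification, the keyspace is split into 16384 hash slots, and a key's slot is CRC16 (XMODEM variant) of the key modulo 16384. If a key contains a non-empty `{...}` hash tag, only the tag is hashed, which keeps related keys in the same slot; this matters because multi-key commands and Lua scripts in a cluster may only touch keys in one slot. The sketch below reimplements that slot function for illustration; the key names in the usage note are hypothetical.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16-CCITT, XMODEM variant (poly 0x1021, init 0), as used by Redis Cluster."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Map a key to one of Redis Cluster's 16384 hash slots."""
    raw = key.encode()
    # Hash tag rule: hash only the text between the first '{' and the next '}',
    # provided that text is non-empty.
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end != -1 and end != start + 1:
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384
```

Different clients' counters (e.g. `{ip:1.2.3.4}:10s` and `{ip:5.6.7.8}:10s`) will generally hash to different slots and so to different nodes, while one client's counters across several windows share a slot and can be updated together in a single script.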

Configurable Time Windows

The default rate limiter supports only a small set of time windows for configuring rate limits. For our use case, a one-minute window is too long: within that period, an attacker can already harm our system and hamper other users' experience. A one-second window, while important, isn't enough on its own, since we'd like to block attacks for longer periods. We therefore wanted windows configurable to any number of seconds.
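One common way to support arbitrary n-second windows is a fixed-window counter per (key, window) pair, checking several windows at once. The minimal sketch below assumes that technique; it is not necessarily our production algorithm. An in-memory dict stands in for Redis, the rules are illustrative, and `clock` is injectable so the behavior can be tested.

```python
import time
from collections import defaultdict
from typing import Callable, Dict, List, Tuple


class FixedWindowLimiter:
    """Fixed-window counters over configurable n-second windows.

    An in-memory dict stands in for Redis here; with Redis, each counter
    would typically be an INCR on the window key plus an EXPIRE of about
    `window` seconds. Old buckets are never evicted in this sketch.
    """

    def __init__(self, rules: List[Tuple[int, int]],
                 clock: Callable[[], float] = time.time) -> None:
        self.rules = rules  # (window_seconds, max_requests) pairs
        self.clock = clock
        self.counts: Dict[str, int] = defaultdict(int)

    def allow(self, key: str) -> bool:
        now = self.clock()
        allowed = True
        for window, limit in self.rules:
            # Every request in the same n-second bucket shares one counter.
            bucket = int(now // window)
            counter_key = f"{key}:{window}s:{bucket}"
            self.counts[counter_key] += 1
            if self.counts[counter_key] > limit:
                allowed = False
        return allowed
```

With rules `[(1, 2), (10, 3)]`, a burst is caught by the 1-second window almost immediately, and the 10-second window keeps the client blocked after the 1-second counter resets, which is the combination the paragraph above argues for. Blocked requests still increment the counters here, so a client that keeps hammering stays blocked; that is a design choice, not a requirement.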

IP Blacklisting/whitelisting

Blacklisting and whitelisting IP addresses is an important part of our rate limiter and can power multiple features. For example, we need to whitelist our own IPs. We may also temporarily whitelist IPs for certain users whose special cases require relaxed rate limits for a short period. We are also working on showing a captcha instead of an error when an IP is rate limited; if the client completes the captcha, we can whitelist that IP for a short period. Finally, we can blacklist IPs that we know are malicious.
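A minimal sketch of allow/deny lists with optional expiry, covering both permanent entries (our own IPs, known-bad IPs) and short-lived ones (e.g. after a captcha). The class, method names, and the rule that the deny list wins over the allow list are hypothetical design choices; in production such entries would more likely live in Redis with a TTL than in a local dict.

```python
import time
from typing import Callable, Dict, Optional


class IPLists:
    """Allow/deny lists with optional per-entry expiry (hypothetical design)."""

    def __init__(self, clock: Callable[[], float] = time.time) -> None:
        self.clock = clock
        # ip -> expiry timestamp, or None for a permanent entry
        self.allowed: Dict[str, Optional[float]] = {}
        self.denied: Dict[str, Optional[float]] = {}

    def _add(self, table: Dict[str, Optional[float]], ip: str,
             ttl: Optional[float]) -> None:
        table[ip] = None if ttl is None else self.clock() + ttl

    def whitelist(self, ip: str, ttl: Optional[float] = None) -> None:
        self._add(self.allowed, ip, ttl)

    def blacklist(self, ip: str, ttl: Optional[float] = None) -> None:
        self._add(self.denied, ip, ttl)

    def _active(self, table: Dict[str, Optional[float]], ip: str) -> bool:
        if ip not in table:
            return False
        expiry = table[ip]
        if expiry is not None and expiry <= self.clock():
            del table[ip]  # lazily drop expired entries
            return False
        return True

    def decision(self, ip: str) -> str:
        """Return 'deny', 'allow' (skip rate limiting) or 'check' (apply limits)."""
        if self._active(self.denied, ip):
            return "deny"
        if self._active(self.allowed, ip):
            return "allow"
        return "check"
```

For example, `lists.whitelist(ip, ttl=300)` after a solved captcha exempts that IP for five minutes, after which it falls back to normal rate limiting.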

Custom logic for different rate limits

While we want to block DoS attacks, we also want to avoid hampering the experience of legitimate users. Legitimate requests follow patterns that DoS attacks generally don't: attack traffic, for example, is usually unauthenticated and repeatedly hits the same endpoint.

There is no definitive way to tell whether a given activity is legitimate, and we cannot write a rate limiter that is guaranteed never to block a legitimate request. But by understanding how users use our platform, we can build a rate limiter that minimizes the probability of a legitimate request receiving a 429 (Too Many Requests) response.
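One way to encode "legitimate traffic looks different" is an ordered rule table that gives anonymous clients tighter limits than logged-in users and lets sensitive endpoints override both. The sketch below assumes a first-match policy; the paths and numbers are made up for illustration and are not our real limits.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    path_prefix: str
    authenticated: Optional[bool]  # None matches both logged-in and anonymous
    window_seconds: int
    max_requests: int


# Hypothetical rules, most specific first: anonymous traffic is limited harder.
RULES: List[Rule] = [
    Rule("/api/login", None, 60, 10),
    Rule("/api/", False, 10, 50),
    Rule("/api/", True, 10, 300),
]


def select_rule(path: str, authenticated: bool,
                rules: List[Rule] = RULES) -> Optional[Rule]:
    """Return the first matching rule, or None if the request is unlimited."""
    for rule in rules:
        if not path.startswith(rule.path_prefix):
            continue
        if rule.authenticated is None or rule.authenticated == authenticated:
            return rule
    return None
```

Because the first match wins, more specific rules must appear earlier in the list; tuning these numbers is exactly the kind of decision that observing real traffic informs.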

Fault tolerance

One of our core priorities while building this service was to ensure that requests don't get blocked when the gateway service itself fails. Put simply, code can and will fail, but that shouldn't affect users' experience on our platform.

This meant building fallbacks and custom code to handle every failure we could anticipate. For example, if Redis crashes, requests shouldn't fail; the gateway should instead keep routing them without the rate-limiting logic. The default rate limiter didn't handle all such cases, which is why we needed to build our own.
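The "if Redis crashes, keep routing" behavior is usually called failing open. A minimal sketch, assuming the rate-limit check is a callable that raises when its store is unreachable; `allow_request` and the logger name are hypothetical. A production version would also bound the check with a short timeout so a slow Redis can't add latency to every request.

```python
import logging
from typing import Callable

logger = logging.getLogger("gateway.ratelimit")


def allow_request(check: Callable[[str], bool], key: str) -> bool:
    """Fail open: if the rate-limit check itself errors, let the request through."""
    try:
        return check(key)
    except Exception:  # e.g. Redis connection refused or timed out
        # Deliberately broad: any limiter failure must not block user traffic.
        logger.warning("rate limiter unavailable; allowing request", exc_info=True)
        return True
```

The trade-off is explicit: while the store is down, the gateway offers no rate-limit protection, but users never see errors caused by the protection layer itself.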

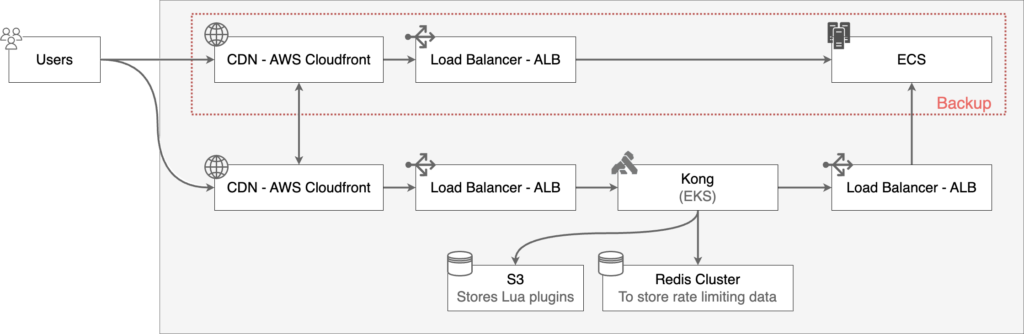

Architecture

We created staging deployments based on a custom header using CloudFront's continuous deployment feature. This let us start by sending only our own traffic to Kong. Once we were confident, we began routing a small percentage of user traffic on a single route and gradually increased that percentage. Throughout, we ran small load and simulation tests against the gateway service and monitored how it reacted.

A CloudFront staging distribution can receive at most 15% of traffic. To go beyond 15%, we used Lambda@Edge: we wrote a Lambda function that changes the request's origin using custom logic. This let us configure request routing very granularly and update it constantly as our requirements and testing evolved. After gradual increases over a long period, we finally released the gateway service to 100% of traffic on the original CDN distribution.
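The routing decision inside such an edge function can be sketched independently of Lambda@Edge's event format, which is not shown here. The sketch assumes deterministic hashing of a client identifier (the client IP, as an assumption) into 100 buckets, with a per-route rollout percentage; `bucket`, `choose_origin`, and the origin names are hypothetical.

```python
import hashlib
from typing import Dict


def bucket(client_id: str) -> int:
    """Map a client to a stable bucket in [0, 100)."""
    digest = hashlib.sha256(client_id.encode()).digest()
    return int.from_bytes(digest[:8], "big") % 100


def choose_origin(client_id: str, path: str, rollout: Dict[str, int],
                  legacy: str, gateway: str) -> str:
    """Send rollout[prefix] percent of clients on a route to the gateway origin."""
    # Longest matching prefix decides the rollout percentage for this path.
    for prefix, percent in sorted(rollout.items(), key=lambda kv: len(kv[0]),
                                  reverse=True):
        if path.startswith(prefix):
            return gateway if bucket(client_id) < percent else legacy
    return legacy  # routes not yet in the rollout stay on the old path
```

Hashing rather than random sampling keeps each client on the same origin across requests, so a student doesn't bounce between the old and new paths mid-quiz; raising a route's percentage only moves additional buckets over.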

Throughout the rollout, our custom rate limiter ran in shadow mode: instead of blocking requests, it updated a metric whenever an IP crossed a rate limit. This let us fine-tune the rate limiter's logic and settle on time windows and rules. Once we had finalized the rules, we disabled shadow mode.
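Shadow mode amounts to a flag between "detect" and "enforce". A minimal sketch, assuming the limiter exposes a boolean check and using a `Counter` as a stand-in for a real metrics client; the class and attribute names are hypothetical.

```python
from collections import Counter
from typing import Callable


class ShadowableLimiter:
    """Wraps a rate-limit check; in shadow mode it records instead of blocking."""

    def __init__(self, check: Callable[[str], bool], shadow: bool = True) -> None:
        self.check = check
        self.shadow = shadow
        self.would_block: Counter = Counter()  # stand-in for a metrics counter

    def allow(self, key: str) -> bool:
        if self.check(key):
            return True
        # Over the limit: always record it, but only block when enforcing.
        self.would_block[key] += 1
        return self.shadow
```

Because the metric is recorded in both modes, the "would have blocked" numbers gathered during shadow mode are directly comparable to the "blocked" numbers after enforcement is switched on. With a real metrics system, labeling by raw IP can create very high cardinality, so aggregating by rule may be preferable.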

We used the Horizontal Pod Autoscaler (HPA) to scale pods and the Cluster Autoscaler (CAS) to scale nodes.
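For readers new to HPA, its core rule, as described in the Kubernetes documentation, is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance of 1.0 (0.1 by default). The simplified sketch below ignores stabilization windows, scaling policies, unready pods, and missing metrics; the default `max_replicas` is an arbitrary placeholder. When the new pods don't fit on existing nodes, the Cluster Autoscaler then adds nodes.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, min_replicas: int = 1,
                         max_replicas: int = 100, tolerance: float = 0.1) -> int:
    """Simplified HPA rule: ceil(current * currentMetric / targetMetric)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the HPA's configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 10 gateway pods averaging 90% CPU against a 60% target scale to ceil(10 × 1.5) = 15 pods, while 63% CPU falls within the tolerance and leaves the count unchanged.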

Roadblocks

Out Of Memory (OOM) Restarts

While we were testing Kong, gateway service pods that crashed with OOM errors failed to restart. This was mostly caused by a bug in Kong: a dying container wouldn't delete its socket connection file, so new containers couldn't spin up because the ports weren't available. This bug was fixed in later versions of Kong.

Conclusion

In summary, our previous system was vulnerable to DoS attacks, and frequent attacks degraded the user experience while increasing infrastructure costs. AWS WAF was proving ineffective against larger attacks because of its long 5-minute time window. We implemented a common gateway layer using Kong that can rate limit requests on configurable time windows. It also let us move common business logic from multiple services into a single service, cleaning up our architecture while reducing how many DoS attacks reach our services. While this has not completely solved the problem for us, it is a good first step in the right direction.